%%%%%%% TARGET PRIMARY NODE CONFIGURATIONS %%%%%%

******* SOURCE NODE DETAILS: *******

Take backup of ORACLE_HOME using below command:

$ nohup tar -cvzf /backup/ORACLE_HOME.tar.gz 12.1.0 &

Once backup done transfer it to TARGET node backup location.

******* TARGET NODE DETAILS: *******

#5.1 Target System Primary Node Configuration (Clone

Initial Node):

ORACLE_HOME Location->

Make directory as below:

$ mkdir -p /u01/12.1.0.1/product/TEST/db/tech_st

Uncompress Source ORACLE_HOME backup to target node directory

created above.

$ nohup tar -xvzf ORACLE_HOME.tar.gz -C

/u01/12.1.0.1/product/TEST/db/tech_st

#5.1.2 Create pairsfile.txt File for Primary Node

Create a [NEW_ORACLE_HOME]/appsutils/clone/pairsfile.txt text

file with contents as shown below:

s_undo_tablespace=[UNDOTBS1 for Initial Node]

s_dbClusterInst=[Total number of Instances in a cluster e.g. 2]

s_db_oh=[Location of new ORACLE_HOME]

Eg:

s_undo_tablespace=UNDOTBS1

s_dbClusterInst=2

s_db_oh=/u01/12.1.0.1/product/TEST/db/tech_st/12.1.0

#5.1.3 Create Context File for Primary Node

Execute the following command to create a new context file.

Navigate to [NEW_ORACLE_HOME]/appsutil/clone/bin and run the

adclonectx.pl utility with the following parameters:

perl adclonectx.pl \

contextfile=[PATH to OLD Source RAC contextfile.xml] \

template=[NEW ORACLE_HOME]/appsutil/template/adxdbctx.tmp

\

pairsfile=[NEW ORACLE_HOME]/appsutil/clone/pairsfile.txt

\

initialnode

Eg:

Note:

For OLD Source RAC contextfile.xml copy the CONTEXT_FILE of

Primary RAC node(Master Node) to target backup location.

perl adclonectx.pl \

contextfile=/backup/SOURCE_CONTEXT_FILE_PROD/PROD_hostname.xml \

template=/u01/12.1.0.1/product/TEST/db/tech_st/12.1.0/appsutil/template/adxdbctx.tmp

\

pairsfile=/u01/12.1.0.1/product/TEST/db/tech_st/12.1.0/appsutil/clone/pairsfile.txt

\

initialnode

Note:

A new and unique global database name (DB name) must be selected

when creating the new target system context file. Do not use the source system

global database name or sid name uring any of the context file interview

prompts as shown below

You will be present with the following questions [sample answers

provided]:

Target System Hostname (virtual or

normal) [Current hostname]:

[Enter appropriate value if not defaulted]

Do you want the inputs to be validated (y/n) [n] ? : [Enter n]

Target Instance is RAC (y/n) [y] : [Enter y]

Target System Database Name : [Enter new desired global DB name, not a

SID]

Do you want to enable SCAN addresses

(y/n) [y] ? : [if y, answer next 2 questions]

Specify value for SCAN Name : [target system SCAN name, if SCAN

addresses enabled]

Specify value for SCAN Port : [target system SCAN port, if SCAN

addresses enabled]

Do you want the target system to have

the same port values as the source

system (y/n) [y] ? : [If the answer to this prompt is 'n' or if the

source port pool is not free on the

current node, enter the value for the next

prompt]

Target System Port Pool [0-99] :

<provide the port_pool>

Provide information for the initial RAC node:

Host name [Current hostname] : [Always need to change this value to the

current

public machine name]

Virtual Host name [null] : [Enter the Clusterware VIP interconnect name]

Instance number [1] : 1 [Enter 1, as this will always be the instance

number when you are on the primary target node]

Private interconnect name : [<Private interconnect name>]

Target System Base Directory : [Enter the base directory that contains

the new_oh_loc

dir]

Oracle OS User [oracle] : [Should default to correct current user; just

press enter]

Oracle OS Group [dba] : [Should default to correct current group; just

press enter]

Target System utl_file_dir Directory List : /usr/tmp [Specify an appropriate location for your requirements]

Number of DATA_TOP's on the Target System [2] : 1 [At present, you can

only have one data_top with RAC-To-RAC cloning]

Target System DATA_TOP Directory 1 : [The shared storage location; ASM

diskgroup/NetApps NFS mount point/OCFS2 mount point for the data directory]

Do you want to preserve the Display [null] (y/n) ? : [Respond according

to

your requirements]

New context path and file name

[<ORACLE_HOME>/appsutil/<CONTEXT_NAME>.xml]

: [Double-check proposed location, and amend if needed]

Note:

If cloning to ASM, a full path is

needed. For example:Target System DATA_TOP Directory 1 : +DATA/dbfile/VISION

This path must be created on the ASM system target manually.

#5.1.3 Configure RDBMS_ORACLE_HOME by executing following

command:

$ perl adcfgclone.pl dbTechStack [Full path to the database

context file

created in previous step]

Eg:

$ perl adcfgclone.pl dbTechStack

/u01/12.1.0.1/product/TEST/db/tech_st/12.1.0/appsutil/TEST_hostname.xml

Create the target database control files manually (if needed) or

modify the existing control files as needed to define datafile, redo and

archive log locations along with any other relevant and required setting.

In this step, you copy and recreate the database using your

preferred method, such as RMAN restore, Flash Copy, Snap View, or Mirror View.

@@@@@@@@@@ RMAN DATABASE CLONING @@@@@@@@@

We will follow below steps for RMAN database backup restore:

Below are some commands and steps to restore database using

conventional RMAN restore steps:

Note:

These steps are to be performed on TARGET server and before

dropping the TARGET instance.

SQL> spool DB_NAME_bef_drop_DATE.txt

SQL> select

instance_name,status,name,database_role,open_mode, from

gv$instance,gv$database;

SQL> select name from v$datafile order by file#;

SQL> select name from v$controlfile;

SQL> select member from v$logfile;

SQL> select file_name from dba_temp_files;

SQL> archive log list;

SQL> show parameter dump;

SQL> show parameter spfile;

---Control file trace

SQL> alter database backup controlfile to trace as

'PATH/cntrl/before_drp/cntrl.txt';

SQL> create pfile='PATH/pfile_DB_NAME.ora' from spfile;

SQL> spool off

Drop TARGET database:

edit pfile and make .cluster_database=FALSE ---- To

drop RAC database

Shut down RAC database and then follow below steps:

$ srvctl status database -d DBNAME -v

$ srvctl stop database -d DBNAME

$ srvctl status database -d DBNAME -v

SQL> startup mount restrict pfile='PATH/pfile_DB_NAME.ora';

SQL> select instance_name,status,name,database_role,open_mode,

from gv$instance,gv$database;

SQL> show parameter spfile;

SQL> drop database;

Note:

Please keep the alert log open to monitor database drop.

Login to asm and check the diskgroups for the

datafiles,controlfiles,parameter files, redo logs, temp files,

They should be empty as the database has been ddropped.

Edit the above created pfile with DB_NAME='PROD'

$ export ORACLE_SID=PROD

$ sqlplus / as sysdba

SQL> startup nomount pfile='PATH/pfile_DB_NAME.ora';

SQL> select instance_name from v$instance;

Restore controlfile from backup piece:

$ rman target /

rman> restrore controlfile from 'CNTRL_FILE_BKP_PIECE';

$ sqlplus / as sysdba

SQL> select instance_name from v$instance;

SQL> alter database mount;

SQL> select name,open_mode from v$database;

Catalog backup pieces:

$ rman target /

rman> catalog start with 'BKP_PIECE_PATH';

Set new name command on TARGET node after mount:

$ sqlplus / as sysdba

SQL> select name,open_mode from v$database;

SQL> set page size 900

SQL> set line size 900

SQL> select ' set newname for datafile ' || '' || FILE# || ''

|| ' to ''+DISK_GRP' ||reverse

(substr(reverse(name),0,instr(reverse(name),'/')-1)) || ''';' from v$datafile;

The above command will set the new name for the datafiles as per

TARGET node env, Copy the output and paste it in the run block.

restore the database using set new name run block:

Eg:

{

run

allocate channel ch1 device type disk;

allocate channel ch2 device type disk;

allocate channel ch3 device type disk;

allocate channel ch4 device type disk;

set new name output paste here

restore database;

switch datafile all;

recover database;

release channel ch1 device type disk;

release channel ch2 device type disk;

release channel ch3 device type disk;

release channel ch4 device type disk;

}

Check rman restore progress using below commands:

TTITLE LEFT '% Completed. Aggregate is the overall progress:'

SET LINE 132

SELECT opname, round(sofar/totalwork*100) "% Complete"

FROM gv$session_longops

WHERE opname LIKE 'RMAN%'

AND totalwork != 0

AND sofar <> totalwork

ORDER BY 1;

TTITLE LEFT 'Channels waiting:'

COL client_info FORMAT A15 TRUNC

COL event FORMAT A20 TRUNC

COL state FORMAT A7

COL wait FORMAT 999.90 HEAD "Min waiting"

SELECT s.sid, p.spid, s.client_info, status, event, state,

seconds_in_wait/60 wait

FROM gv$process p, gv$session s

WHERE p.addr = s.paddr

AND client_info LIKE 'rman%';

TTITLE LEFT 'Files currently being written to:'

COL filename FORMAT a50

SELECT filename, bytes, io_count

FROM v$backup_async_io

WHERE status='IN PROGRESS'

/

Once recovery done, backup the control file to trace:

SQL> alter database backup controlfile to trace as

'PATH/cntrl/after_restore/cntrl.txt';

SQL> select name,free_mb,total_mb from v$asm_diskgroup;

SQL> select name,open_mode from v$database;

SQL> shutdown immediate

Change the DB_NAME in pfile to the instance that you are cloning

to.

Login to asm:

Take bkp of all the existing control files then, remove them and

follow below steps:

From the control file trace modify the control file as per the

requirement.

$ export ORACLE_SID=INST_NAME

$ sqlplus / as sysdba

SQL> startup nomount pfile='PATH/pfile_DB_NAME.ora';

SQL> select instance_name from v$instance;

SQL> @run_cntrl.txt

---- Modified

trace file run

SQL> select name,open_mode,log_mode from v$database;

SQL> alter database open resetlogs;

Note:

Keep the alert log open and check which temp tablespaces are

empty and add temp files accordingly.

Add temp files:

SQL> alter tablespace TEMP1 add temp files '+DISK_GROUP' size

4G;

SQL> alter tablespace TEMP2 add temp files '+DISK_GROUP' size

4G;

SQL> select name,open_mode,log_mode from v$database;

SQL> shutdown immediate

Edit pfile and make .cluster_database=TRUE ---- To

enable RAC database

$ export ORACLE_SID=INST_NAME

SQL> startup nomount pfile='PATH/pfile_DB_NAME.ora';

SQL> select instance_name from v$instance;

SQL> alter database mount;

SQL> alter database open;

SQL> select name,open_mode,log_mode from v$database;

SQL> select instance_name,status,name,database_role,open_mode,

from gv$instance,gv$database;

SQL> select INST_ID,group#,thread#,members,bytes from

gv$log;

SQL> select INST_ID,group#,member from gv$logfile;

If required enable thread:

SQL> alter database enable public thread 2;

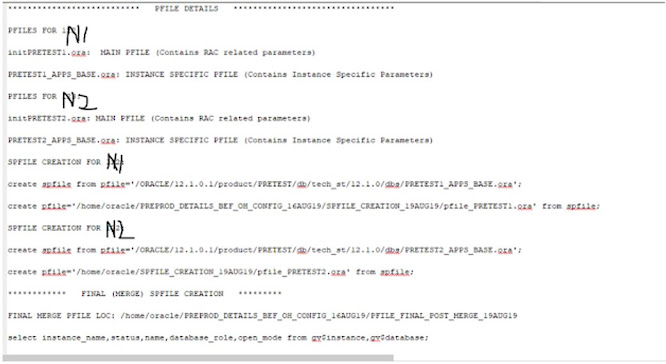

Create spfile from pfile:

SQL> select

instance_name,status,name,database_role,open_mode, from

gv$instance,gv$database;

SQL> create spfile='+DISK_GROUP' from

pfile='PATH/pfile_DB_NAME.ora';

SQL> shutdown immediate

Start the RAC database:

$ srvctl status database -d DBNAME -v

$ srvctl start database -d DBNAME

$ srvctl status database -d DBNAME -v

Start the new target RAC database in open mode.

Run the library update script against the RAC database.

$ cd [RDBMS ORACLE_HOME]/appsutil/install/[CONTEXT_NAME]

$ sqlplus "/ as sysdba" @adupdlib.sql [libext]

Where [libext] should be set to 'sl' for HP-UX, 'so' for any

other UNIX platform, or 'dll' for Windows.

Configure the primary target database

The database must be running and open before performing this

step.

$ cd [RDBMS ORACLE_HOME]/appsutil/clone/bin

$ perl adcfgclone.pl dbconfig [Database target context file]

Where Database target context file is: [RDBMS

ORACLE_HOME]/appsutil/[Target CONTEXT_NAME].xml.

Eg:

$ perl adcfgclone.pl dbconfig /u01/12.1.0.1/product/TEST/db/tech_st/12.1.0/appsutil/TEST_hostname.xml

Note:

The dbconfig option will

configure the database with the required settings for the new target, but it

will not recreate the control files.

%%%%%% TARGET SECONDARY NODE CONFIGURATIONS %%%%%%%

Follow the steps below to clone the

secondary nodes (for example, Node 2) on to the target system.

5.2.1 Add Secondary RAC node information to sqlnet.ora

This is a two-node Oracle RAC example.

Go to the primary node and add the

secondary node information to the sqlnet.ora file:

RAC node 1: host1.example.com

RAC node 1 vip: host1-vip.example.com

RAC node 2: host2.example.com

RAC node 2 vip: host2-vip.example.com

Open the $ORACLE_HOME/network/admin/<context>/sqlnet.ora

file for editing, and add the Oracle RAC Node 2 information by changing the

line shown.

FROM:

tcp.invited_nodes=(host1.example.com, host1-vip.example.com)

TO:

tcp.invited_nodes=(host1.example.com, host1-vip.example.com, host2.example.com,

host2-vip.example.com)

Then reload the listener to reflect

the change.

Note:

The host1 entries should be there

after a successful clone.

#5.2.2 Uncompress the archived ORACLE_HOME transferred

from the Source System

Uncompress the source system ORACLE_HOME archive to a location

matching that present on your target system primary node. The directory

structure should match that present on the newly created target system primary

node.

$ tar -xvzf rac_db_oh.tgz

#5.2.3 Archive the [ORACLE_HOME]/appsutil directory

structure from the new Primary Node

Log in to the new target system primary node, and execute the

following commands:

$ cd [ORACLE_HOME]

$ zip -r appsutil_node1.zip appsutil

#5.2.4 Copy appsutil_node1.zip to the Secondary Target

Node

Transfer and then expand the appsutil_node1.zip into the

secondary target RAC node [NEW ORACLE_HOME].

$ cd [NEW ORACLE_HOME]

$ unzip -o appsutil_node1.zip

#5.2.5 Update pairsfile.txt for the Secondary Target Node

Alter the existing pairsfile.txt (from the first target node)

and change the s_undo_tablespace parameter. As this is the second node, the

correct value would be UNDOTBS2. As an example, the

[NEW_ORACLE_HOME]/appsutils/clone/pairsfile.txt would look like:

s_undo_tablespace=[Or UNDOTBS(+1) for additional Nodes]

s_dbClusterInst=[Total number of Instances in a cluster e.g. 2]

s_db_oh=[Location of new ORACLE_HOME]

Eg:

s_undo_tablespace=UNDOTBS2

s_dbClusterInst=2

s_db_oh=/u01/12.1.0.1/product/TEST/db/tech_st/12.1.0

#5.2.6 Create a Context File for the Secondary Node

Navigate to [NEW_ORACLE_HOME]/appsutil/clone/bin and run the

adclonectx.pl utility as follows:

$ perl adclonectx.pl \

contextfile=[Path to Existing Context File from the First

Node] \

template=[NEW ORACLE_HOME]/appsutil/template/adxdbctx.tmp

\

pairsfile=[NEW ORACLE_HOME]/appsutil/clone/pairsfile.txt

\

addnode

Note:

In path to existing CONTEXT_FILE, please give the path to the

INSTANCE 1 CONTEXT_FILE that is configured above.

Note:

When answering the questions below, review your responses

carefully before entering them. The rest of the inputs (not shown) are the same

as those encountered during the context file creation on the initial node

(primary node).

Host name of the live RAC node : [Hostname of RAC Node 1]

Domain name of the live

RAC node : [Domain name of RAC Node 1]

Database SID of the live

RAC node : [enter the individual SID of RAC Node 1, NOT the Global DB name]

Listener port number of

the live RAC node : [enter the port number of the

Primary Target Node

you just created]

Provide information for

the new Node:

Host name : <Current

hostname>[enter appropriate value if not defaulted]

Virtual Host name : [enter the Clusterware VIP interconnect name]

Instance number : 2

[enter the instance # for this current node]

Private interconnect

name : [enter the private interconnect

name]

Current Node:

Host Name : <Current

Hostname>

SID : <Database SID

of current RAC node>

Instance Name :

<Instance name of current RAC node>

Instance Number :

<Instance number>

Instance Thread :

<Instance thread>

Undo Table Space:

<Undo tablespace name provided in pairs file>

Listener Port :

<Listener port>

Target System quorum

disk location required for cluster manager and node

monitor : [legacy parameter, enter /tmp]

Target System Base Directory : [Enter the base directory that

contains the new_oh_loc dir]

Oracle OS User [oracle] :

Oracle OS Group [dba] :

Target System utl_file_dir Directory List : /usr/tmp [Specify an

appropriate location for your requirements]

Number of DATA_TOP's on the Target System [2] : 1 [At present,

you can only have one data_top with RAC-To-RAC cloning]

Target System DATA_TOP Directory 1 :

[The shared storage location; ASM diskgroup/NetApps NFS mount point/OCFS2 mount point]

Do you want to preserve the Display [null] (y/n) ? : [Respond

according to your requirements]

Target System Display [null] : [Respond according to your

requirements]

New context path and file name

[<ORACLE_HOME>/appsutil/<CONTEXT_NAME>.xml] :

[Double-check proposed location, and

amend if needed]:

Note:

At the conclusion of these "interview" questions

related to context file creation, look carefully at the generated context file

and ensure that the values contained therein compare to the values entered

during context file creation on Node 1. The values should be almost identical,

a small but important exception being the local instance name will have a

number 2 instead of a 1.

#5.2.7 Configure NEW ORACLE_HOME

Run the commands below to move to the correct directory and

continue the cloning process:

$ cd [NEW ORACLE_HOME]/appsutil/clone/bin

$ perl adcfgclone.pl dbTechStack [Full path to the database

context file created in previous step]

Note:

At the conclusion of this command, you will receive a console

message indicating that the process exited with status 1 and that the

addlnctl.sh script failed to start a listener named [SID]. That is expected, as

this is not the proper service name. Start the proper listener by executing the

following command:

[NEW_ORACLE_HOME]/appsutil/scripts/[CONTEXT_NAME]/addlnctl.sh

start LISTENER_[hostname].

This command will start the correct (RAC-specific) listener with

the proper service name.

Note:

If

the database version is 12c Release 1, ensure to add the following line in your

sqlnet_ifile.ora after adcfgclone.pl execution completes:

- SQLNET.ALLOWED_LOGON_VERSION_SERVER

= 8 (if the initialization

parameter SEC_CASE_SENSITIVE_LOGON is set to FALSE)

- SQLNET.ALLOWED_LOGON_VERSION_SERVER

= 10 (if SEC_CASE_SENSITIVE_LOGON is set to TRUE)

Note:

If this secondary node uses ASM with database job role

separation, execute the following commands as the grid user to set

correct permissions and group for the oracle executable in the Database Oracle

Home.

$ cd <Grid Home>/bin

$ ./setasmgidwrap o=<ORACLE_HOME>/bin/oracle

#5.2.8 Source the new environment file in the ORACLE_HOME

Run the commands below to move to the correct directory and

source the environment:

$ cd [NEW ORACLE_HOME]

$ ./[CONTEXT_NAME].env

#5.2.9 Modify [SID]_APPS_BASE.ora

Edit the [SID]_APPS_BASE.ora file and change the control file parameter

to reflect the correct control file location on the shared storage. This will

be the same value as in the [SID]_APPS_BASE.ora on the target system primary

node which was just created.

#5.2.10 Start Oracle RAC Database

Start the database using the following commands:

$ sqlplus /nolog

SQL> connect / as sysdba

SQL> startup

#5.2.11 Execute AutoConfig

Run AutoConfig to generate the proper listener.ora and

tnsnames.ora files:

$ cd $ORACLE_HOME/appsutil/scripts/$CONTEXT_NAME

$ ./adautocfg.sh appspass=[APPS Password]

If AutoConfig fails, and you see any

"TNS" errors in the AutoConfig log files, you should ensure the

listeners are registered properly, and then re-run AutoConfig on the second

node.

#5.3 Carry Out Target System (Primary Node) Final Oracle

RAC Configuration Tasks

#5.3.1 Recreate TNSNAMES and LISTENER.ORA

Login again to the target primary node (Node 1) and run

AutoConfig to perform the final Oracle RAC configuration and create new

listener.ora and tnsnames.ora (as the FND_NODES table did not contain the

second node hostname until AutoConfig was run on the secondary target RAC

node).

$ cd $ORACLE_HOME/appsutil/scripts/[CONTEXT_NAME]

$ ./adautocfg.sh appspass=[APPS Password]

Note:

This execution of AutoConfig on the

primary target Oracle RAC Node 1 will add the second Oracle RAC Node connection

information to the first node's tnsnames.ora, such that listener load balancing

can occur. If you have more than two nodes in your new target system cluster,

you must repeat Sections 4.2 and 4.3 for all subsequent nodes.

No comments:

Post a Comment